Yueheng Zeng @ Project 5

This project focuses on implementing a diffusion model to generate images. The diffusion model is a generative model that generates images by iteratively applying a series of transformations to a noise image. The model is trained to generate images that are similar to the training data. The project is divided into two parts. In Part 1, we implement the forward process of the diffusion model and explore different denoising techniques using a pre-trained diffusion model. In Part 2, we train a single-step denoising UNet and extend it to include time-conditioning and class-conditioning.

Course Logo Generated and Upsampled by Diffusion Model

Octo... Dog? 🤣

In this part, we set up the environment and load the pre-trained diffusion model to generate images using seed 180.

Generated Images with num_inference_steps=20

Generated Images with num_inference_steps=40

We can see the quality of the generated images are quite good. They are highly correlated with the text prompts. However, there are some artifacts in the images. After increasing the number of inference steps, there is actually not much difference in the quality of the images. The defects in the images are still present.

In this part, we implement the forward process of the diffusion model.

Generated Images at t = 250, 500, and 750

In this part, we use the classical Gaussian blur filter to denoise the images.

Noisy Image at t = 250 with Gaussian Blur Denoising

Noisy Image at t = 500 with Gaussian Blur Denoising

Noisy Image at t = 750 with Gaussian Blur Denoising

In this part, we use the pre-trained diffusion model to denoise the images with one step.

Noisy Image at t = 250 with One-Step Denoising

Noisy Image at t = 500 with One-Step Denoising

Noisy Image at t = 750 with One-Step Denoising

In this part, we iteratively denoise the images with the pre-trained diffusion model.

Predicted Noisy Images at t = 690, 540, and 390 with Iterative Denoising

Predicted Noisy Images at t = 240, 90 with Iterative Denoising

Test Image, Iterative Denoised Image, One-Step Denoised Image, and Gaussian Blur Denoised image

In this part, we sample images from the diffusion model.

Generated Images

In this part, we use the classifier-free guidance to guide the diffusion model to generate images with the prompt "a high quality photo".

Generated Images with CFG

We can see that the generated images have higher quality than the images generated without CFG.

In this part, we're going to take the original test image, noise it a little, and force it back onto the image manifold without any conditioning.

Campenile

SDEdit with i_start = 1, 3, 5, 7

SDEdit with i_start = 10, 20, 30, and Original Image

Mong Kok

SDEdit with Mong Kok and i_start = 1, 3, 5, 7

SDEdit with Mong Kok and i_start = 10, 20, 30, and Original Mong Kok Image

Victoria Harbour

SDEdit with Victoria Harbour and i_start = 1, 3, 5, 7

SDEdit with Victoria Harbour and i_start = 10, 20, 30, and Original Victoria Harbour Image

In this part, we're going to apply the SDEdit to nonrealistic images (e.g. painting, a sketch, some scribbles), making them look more realistic.

Hand-Drawn Image: Merry Cat-mas!

SDEdit with Merry Cat-mas! and i_start = 1, 3, 5, 7

SDEdit with Merry Cat-mas! and i_start = 10, 20, 30, and Original Merry Cat-mas! Image

Hand-Drawn Image: Monster

SDEdit with Monster and i_start = 1, 3, 5, 7

SDEdit with Monster and i_start = 10, 20, 30, and Original Monster Image

Web Image: Minecraft

SDEdit with Minecraft and i_start = 1, 3, 5, 7

SDEdit with Minecraft and i_start = 10, 20, 30, and Original Minecraft Image

In this part, we're going to implement inpainting. To do this, we can run the diffusion denoising loop. But at every step, after obtaining the denoised image, we can force the pixels that we do not want to change back to the original image.

Campanile

Original Image, Mask, and Inpainted Image

Street

Original Street Image, Street Mask, and Inpainted Street Image

Victoria Harbour

Original Victoria Harbour Image, Victoria Harbour Mask, and Inpainted Victoria Harbour Image

In this part, we will do the same thing as SDEdit, but guide the projection with a text prompt. This is no longer pure "projection to the natural image manifold" but also adds control using language.

Campanile -> "a rocket ship"

SDEdit with "a rocket ship" and i_start = 1, 3, 5, 7

SDEdit with "a rocket ship" and i_start = 10, 20, 30, and Original Image

Octocat -> "a photo of a dog"

SDEdit with "a photo of a dog" and i_start = 1, 3, 5, 7

SDEdit with "a photo of a dog" and i_start = 10, 20, 30, and Original Octocat Image

Hoover Tower -> "a rocket ship"

SDEdit with "a rocket ship" and i_start = 1, 3, 5, 7

SDEdit with "a rocket ship" and i_start = 10, 20, 30, and Original Hoover Tower Image

In this part, we are going to implement Visual Anagrams. We will create an image that looks like something else when flipped upside down. The only modification to the original iterative denoising is that we will calculate \(\epsilon\) as follows: \[ \epsilon_1 = \text{UNet}(x_t, t, p_1) \] \[ \epsilon_2 = \text{flip}(\text{UNet}(\text{flip}(x_t), t, p_2)) \] \[ \epsilon = (\epsilon_1 + \epsilon_2) / 2 \]

An Oil Painting of People Around a Campfire and An Oil Painting of an Old Man

An Oil Painting of a Snowy Mountain Village and An Oil Painting of People Around a Campfire

A Dreamy Oil Painting of a Crescent Moon Cradling a Sleeping Figure and An Ocean Wave Crashing Against a Lighthouse

In this part, we are going to implement Factorized Diffusion and create hybrid images that look like one thing up close and another thing from far away. The only modification to the original iterative denoising is that we will calculate \(\epsilon\) as follows: \[ \epsilon_1 = \text{UNet}(x_t, t, p_1) \] \[ \epsilon_2 = \text{UNet}(x_t, t, p_2) \] \[ \epsilon = f_\text{lowpass}(\epsilon_1) + f_\text{highpass}(\epsilon_2) \]

Hybrid Lithograph of a Skull (Low) and Waterfalls (High)

Hybrid Image of an Ancient Clock Face (Low) and Historical Moments (High)

Hybrid Oil Painting of an Old Man (Low) and a Snowy Mountain Village (High)

In this part, we are going to design a course logo using the diffusion model with prompt "A man whose head is a camera of brand CS180".

Course Logo (Upsampled)

The man in the logo looks cool! However, the CS180 brand is not on the camera (it might be caused by the word CS180 is not shown in the training data).

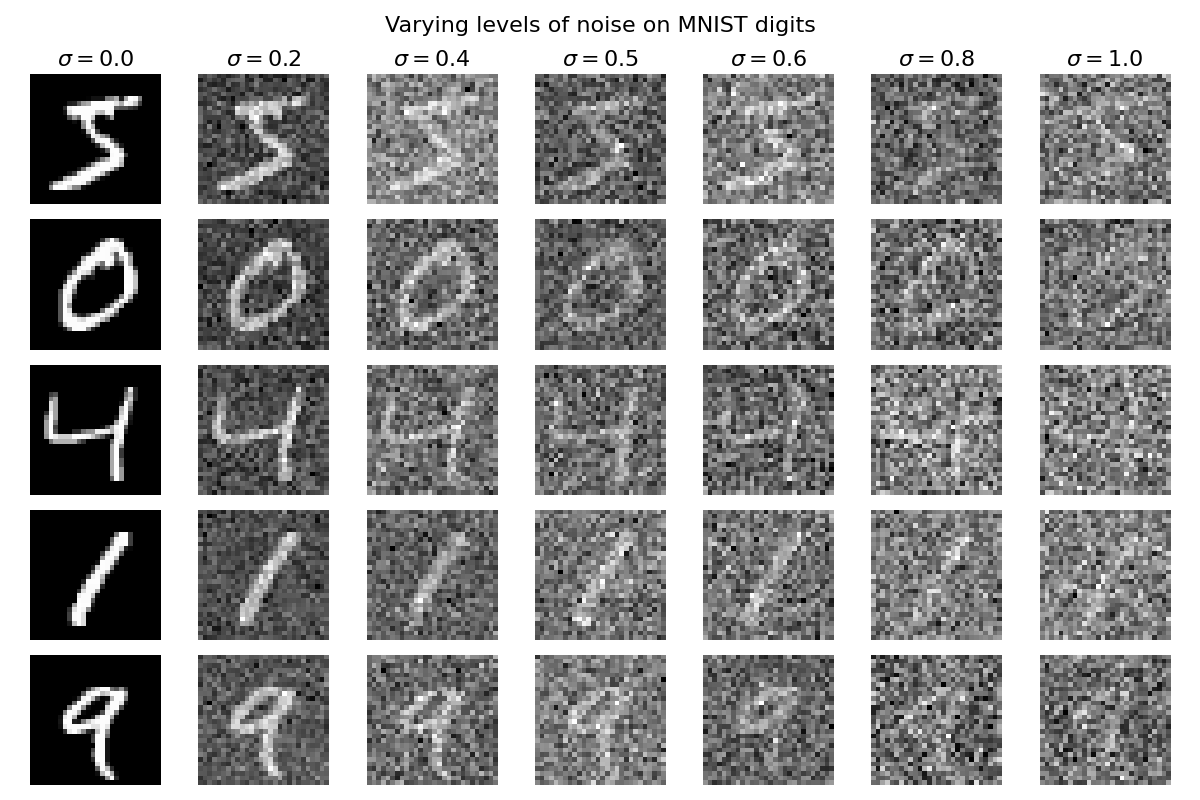

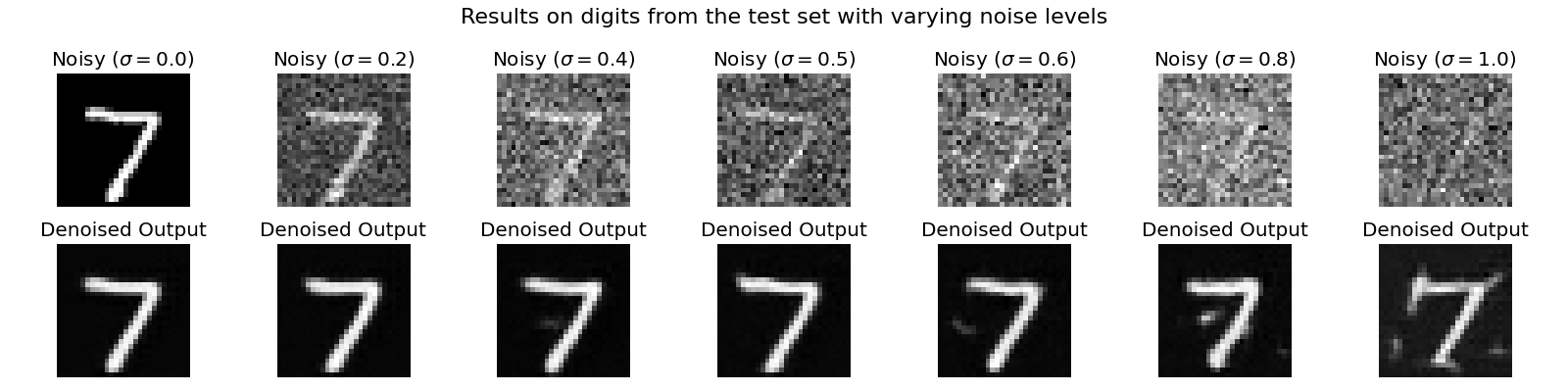

In this part, we are going to train a single-step denoising UNet to denoise the digits in the MNIST dataset. Firstly, we will need to implement the noising process defined as follows: \[ z = x + \sigma \epsilon,\quad \text{where }\epsilon \sim N(0, I) \]

Varying levels of noise on MNIST digits

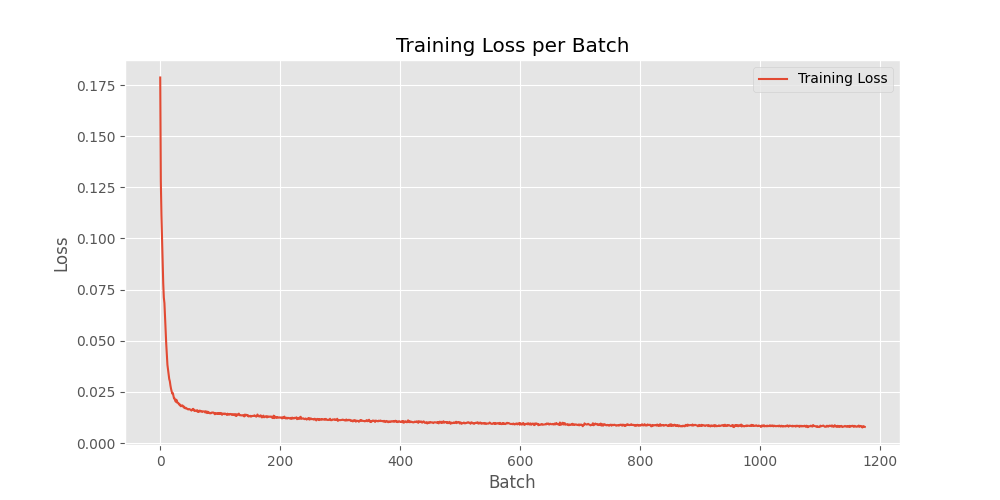

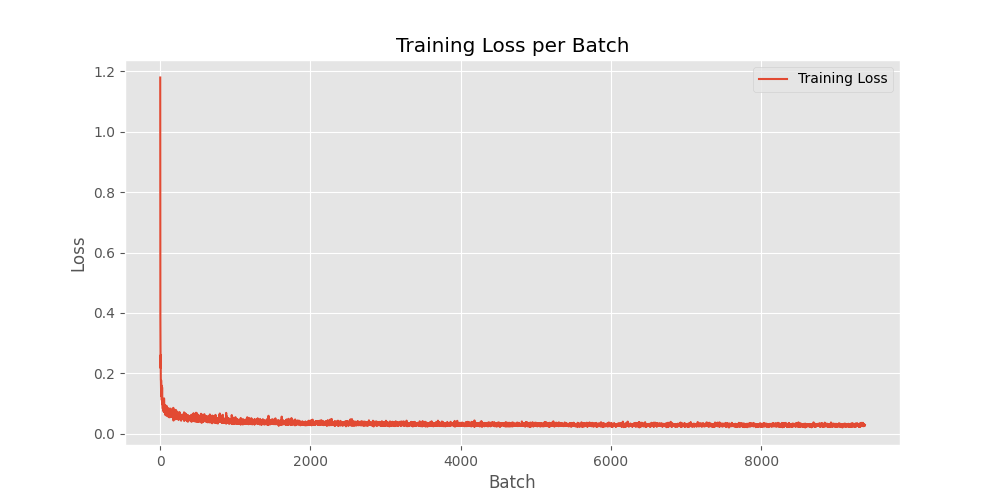

Then we will train a single-step denoising UNet to denoise the noisy digits at \(\sigma = 0.5\).

Training Loss per Batch

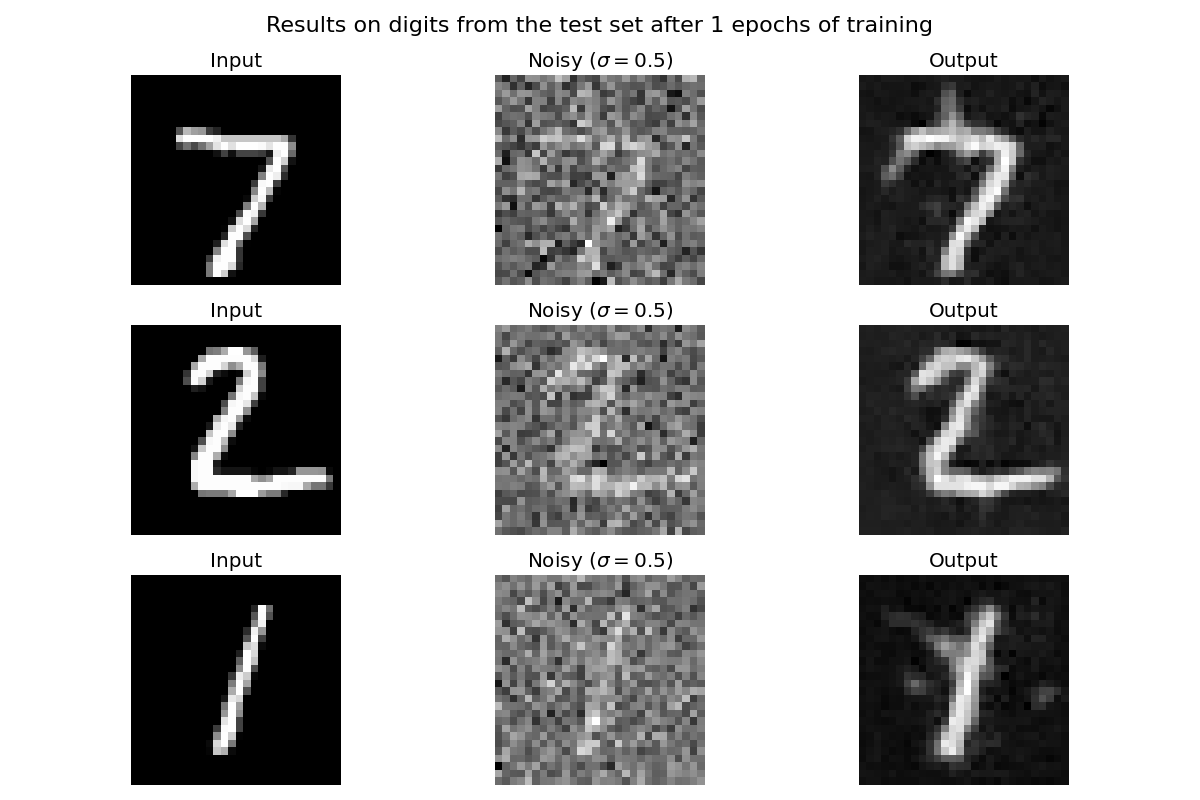

Results on digits from the test set after 1 epochs of training

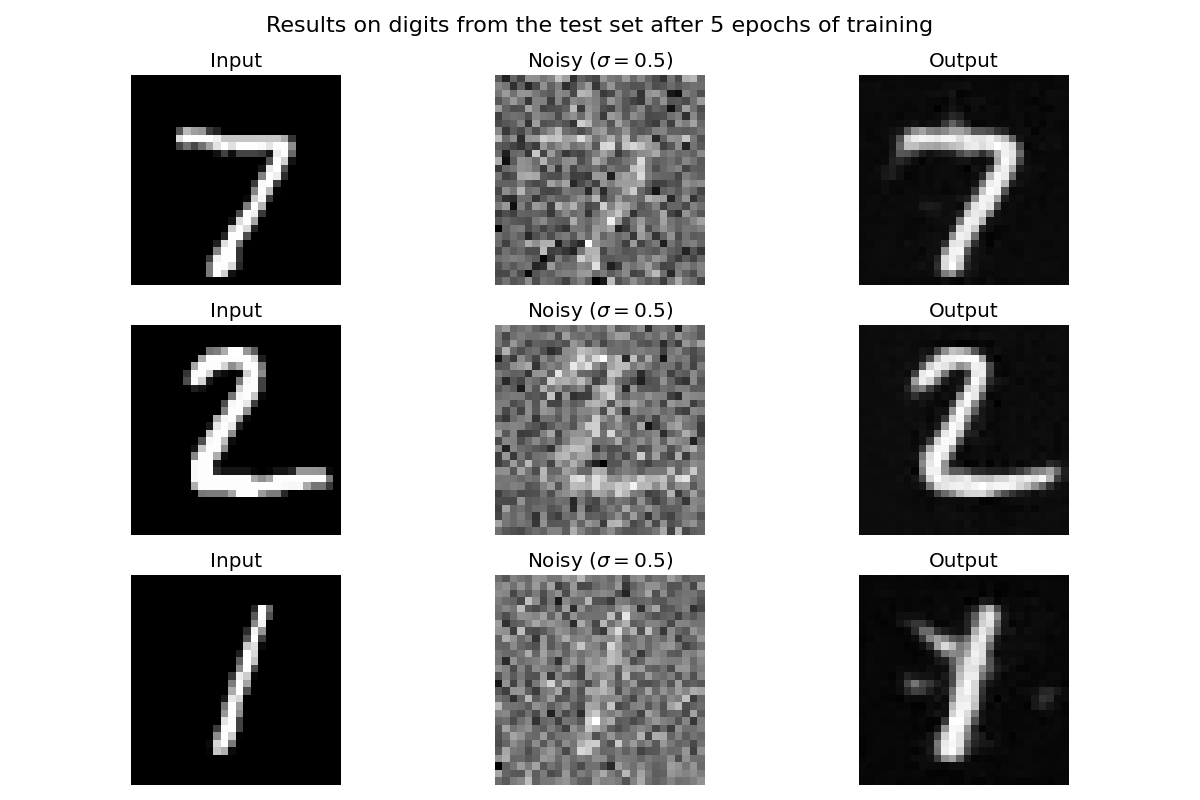

Results on digits from the test set after 5 epochs of training

We can see that the denoising UNet can denoise the noisy digits effectively after 5 epochs of training. However, what if we let it denoise the digits with different levels of noise that it was not trained on?

Results on digits from the test set with varying noise levels

We can see that the denoising UNet cannot denoise the digits effectively with noise levels that it was not trained on, especially when the noise level is high.

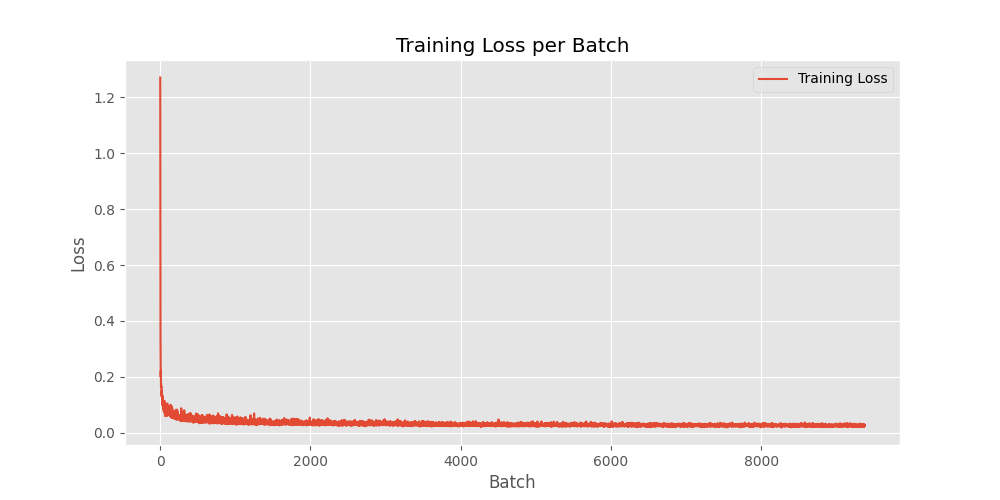

In this part, we are going to add time-conditioning to the UNet, making it a diffusion model. Firstly, we will add the noise with the following equation: \[ x_t = \sqrt{\bar\alpha_t} x_0 + \sqrt{1 - \bar\alpha_t} \epsilon \quad \text{where}~ \epsilon \sim N(0, 1) \] And our objective is to minimize the following loss function: \[ L = \mathbb{E}_{\epsilon,x_0,t} \|\epsilon_{\theta}(x_t, t) - \epsilon\|^2 \]

Training Loss per Batch

After training the diffusion model, we can sample high-quality digits from the model iteratively.

|

After 5 epochs

|

After 20 epochs

|

Sampling digits from time-conditioned UNet after 5 and 20 epochs iteratively

In this part, we are going to add class-conditioning to the UNet, enabling us to specify the which digit we want to generate.

Training Loss per Batch

After training the class-conditioned diffusion model, we choose which digit to generate and sample high-quality digits from the model iteratively.

|

After 5 epochs

|

After 20 epochs

|

Sampling digits from class-conditioned UNet after 5 and 20 epochs iteratively

This part has already been completed in the previous parts.